- ChatGPT’s credibility undermined by ‘hallucinations’ - July 26, 2023

By Paul Russell, LegalMatters Staff • While ChatGPT is being embraced for its ability to quickly provide users with answers to virtually any question, its well-documented tendency to produce erroneous information can seriously damage a person’s reputation, says Toronto defamation lawyer Howard Winkler.

“If an artificial intelligence (AI) platform publishes or creates content that is false or is a ‘hallucination,’ as the industry refers to it, that can lead to significant harm,” says Winkler, principal and founder of Winkler Law. “In those cases, courts will likely be inclined to fashion a remedy to provide compensation.”

“It was only a matter of time for a defamation claim to be launched against ChatGPT, and that time has come,” he adds, referring to a lawsuit filed in Georgia.

According to a media report, Georgia radio host Mark Walters claims that the AI platform produced the text of a legal complaint that accused him of embezzling money from a gun rights group.

‘The AI-generated complaint … is entirely fake’

“The problem is, Walters says, he’s never been accused of embezzlement or worked for the group in question,” the report states. “The AI-generated complaint, which was provided to a journalist using ChatGPT to research an actual court case, is entirely fake.”

“This is the first case in the United States where an AI platform has faced legal action for publishing defamatory content,” Winkler tells LegalMattersCanada.ca. “It is interesting in the Canadian context since one of the issues that will arise in the Georgia case is whether open AI platforms benefit from a defence under Section 230 of the American Communications Decency Act.”

He explains that s.230 protects American internet platforms from legal liability for any content their users create.

“That defence would not apply in Canada or other common law jurisdictions outside of the United States,” Winkler adds.

Anyone using ChatGPT can enter a question, and the AI platform produces an answer that is delivered “with an apparent certainty,” he says.

ChatGPT expresses lies ‘with confidence’

“ChatGPT expresses its responses, and its lies, with confidence,” Winkler says. “It doesn’t provide footnotes or source material that someone can double-check. It simply represents the information it provides as truth.”

Reports of alleged “hallucinations” of fake facts generated by ChatGPT have emerged from around the globe.

“An Australian mayor made news in April when he said he was preparing to sue OpenAI because ChatGPT falsely claimed that he was convicted and imprisoned for bribery,” the article notes.

In the United States, a federal judge imposed US$5,000 fines on two lawyers and a law firm after ChatGPT was blamed for their submission of fictitious legal research in an aviation injury claim.

“Technological advances are commonplace and there is nothing inherently improper about using a reliable artificial intelligence tool for assistance,” the judge wrote, according to a media report. “But existing rules impose a gatekeeping role on attorneys to ensure the accuracy of their filings.”

No tolerance for AI mistakes in court submissions

Winkler says it was appropriate for the lawyers and their firm to be hit with a reprimand and financial penalties.

“There is no excuse for including citations in court material without first verifying what is being cited,” he says. “The Court was trying to not only criticize the lawyers for their behaviour but also to indicate what is the appropriate standard for legal professionals using AI as a research tool. Relying on AI-generated responses without checking them is unacceptable.”

In Manitoba, a chief justice issued a practice direction that states lawyers have to disclose if they used AI to craft their submissions.

“The chief justice was wise to issue that direction,” says Winkler. “AI is a new source of information but it is far from trustworthy, so it is appropriate for the courts to provide some guidance on its use.”

Going forward, he says the “real interesting question is whether the lies promulgated by an AI response to an inquiry will constitute a publication which can then lead to liability for defamation.”

“With ChatGPT, the answer to a query may only be published to one person, but the people who designed the AI platform know or ought to know that the responses could gain wide circulation, which then opens up the prospect of significant damages,” Winkler adds.

He says one mitigating factor for ChatGPT is that OpenAI, the firm behind it, expressly admits that the product can produce some false information.

ChatGPT admits it ‘hallucinates’ facts

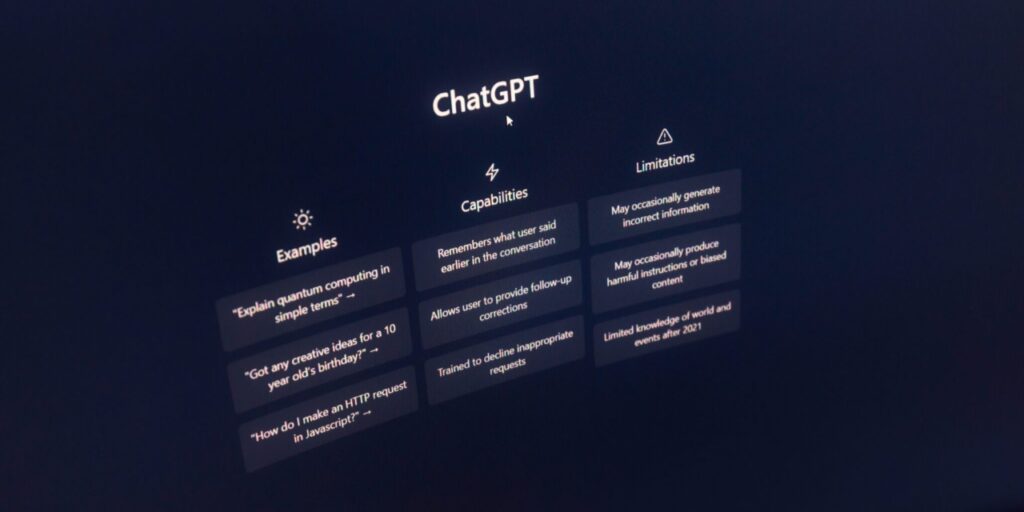

Under the subtitle “Limitations,” the ChatGPT website notes, “Despite its capabilities, GPT-4 … is not fully reliable (it ‘hallucinates’ facts and makes reasoning errors). Great care should be taken when using language model outputs.”

“Is this disclaimer sufficiently brought to the attention of users?” Winkler asks. “Are most people aware that AI platforms may produce lies, so great care should be taken before republishing anything that’s given as a response to a query?

“From a legal perspective, it is uncertain as to whether that disclaimer will be sufficient,” he adds.

In the Georgia case and other lawsuits to follow, “there is a likelihood of liability against these AI platforms,” Winkler says. “I don’t believe a simple disclaimer hidden in terms of use will be sufficient to protect them from legal consequences.”

He says ChatGPT should make more of an effort to make users aware of the risk of receiving false information in responses, although doing so may hurt the reputation of the platform.

“Admitting that many responses are fabricated or that hallucinations can occur will undermine the functional use and credibility of the AI platform,” Winkler says.

He acknowledges that part of the problem is that the design of artificial intelligence platforms “is really in its infancy. Time will tell whether future versions will be more reliable.”